I contributed to the article ‘Advancing ethics review practices in AI research’, published in Nature Machine Intelligence. The initiative was organised and coordinated by Partnership on AI, a non-profit community of academic, civil society, industry and media organisations addressing challenging questions around the future of AI.

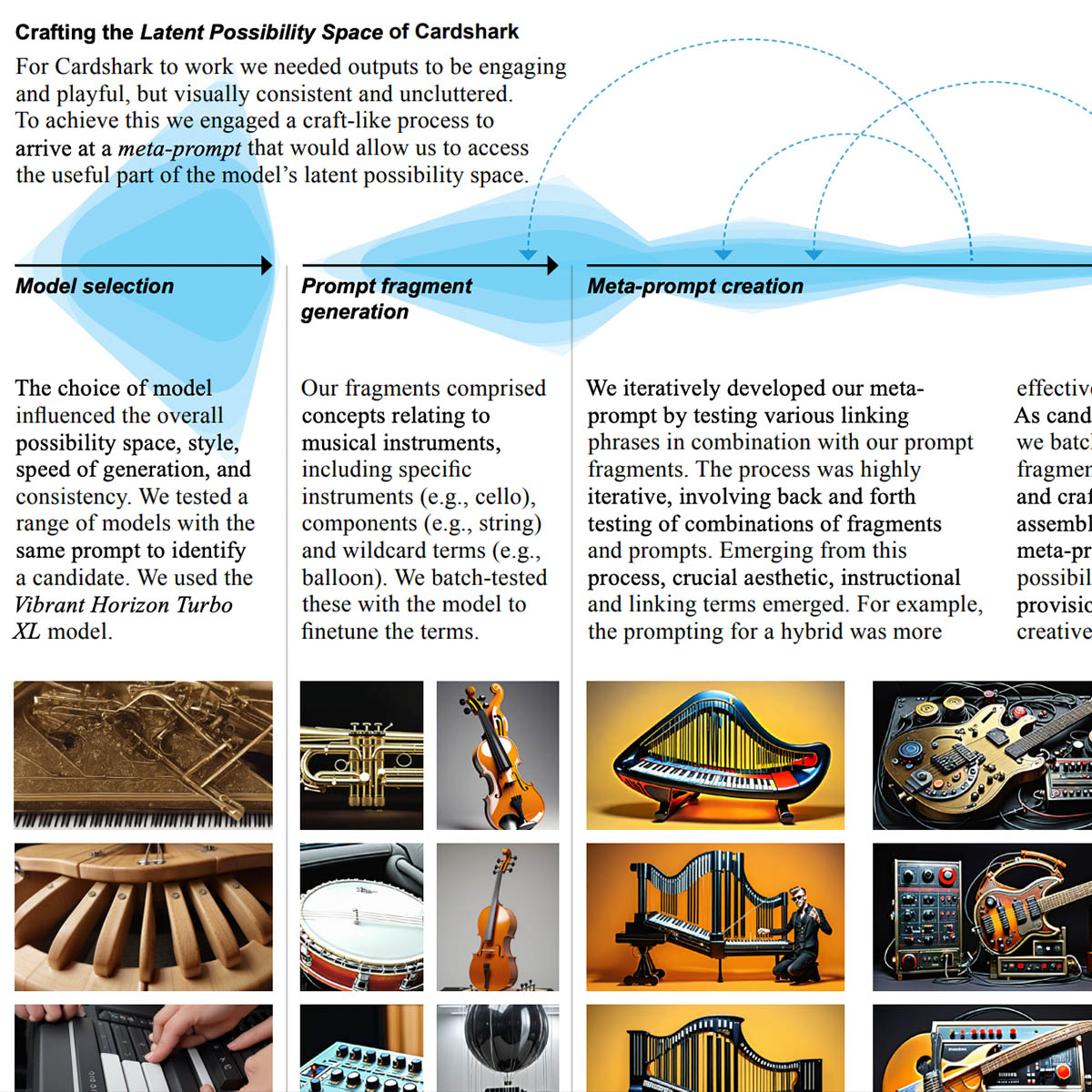

The work connects with other things I’m involved with via RAIL, the PETRAS UncannyAI project, the Trust me? I’m an autonomous machine project, Consequences Schmonsequences, which I co-organised at CHI 2021, and Jesse’s ongoing Entoptic Media work. The common interest is in strategies for getting the most out of emerging technologies like AI in a way that doesn’t fall foul of any ‘Heffalump traps’.

The angle we explored in this piece is that ethical review processes are a viable ‘lever’ that could be used to help avoid unintended consequences and potential harms arising from AI innovation. By no means do I think this is the only mechanism by which we should be careful with AI, but it’s certainly a useful bit of machinery.